Abstract

Today higher education in Russia is shifting to digital forms of presenting and processing information. Emerging technologies make it possible to organize and conduct the educational process in higher education at a qualitatively new level. There is a need to develop new options for organizing the educational process, including qualitatively new methods for assessing knowledge. Traditional testing conducted with computing facilities in the active electronic educational environment of a university is an affordable means of measuring the knowledge, the skills and, in some cases, the abilities of students. As they develop and improve, computer technologies for assessing student study results offer more and more new testing methods. However, one also has to take into account the new risks associated with the peculiarities of their implementation, namely the risks of incorrect assessment of the learner's knowledge. The article provides a classification of such risks, analyzes the causes of their occurrence, and suggests methods to minimize their impact on the assessment results, which ultimately increases the reliability of a computer-based testing system.

Keywords: Computer-based testing, assessment tools fund, testing risks, testing reliability, testing validity

Introduction

Monitoring study outcomes is one of the most important tasks of the educational process. Although the modern scientific literature still describes testing technologies based on filling out hardcopy questionnaires (Rubtsova, 2017), educational process management systems such as Moodle make it possible to automate both the testing process and the processing of its results and, which is especially important, to make testing interactive and to conduct it remotely as well. In addition to the exams and pass/fail tests traditional for higher education, a computer-based education test, which consists of a set of tasks evaluated on a pre-developed scale, can be used as a tool for assessing knowledge and, in some cases, skills and abilities. At the same time, measures should be taken to prevent distortion of the results (cheating, prompting) and leakage of information about the contents of the tests. The test quality criteria are reliability, validity, and discriminatory power. In addition, the test must ensure objectivity, a characteristic that combines reliability and validity with a number of psychological, pedagogical, ethical, and value-based aspects, as well as the safety of using the test results.

Problem Statement

By the safety of computer-based testing, we mean a set of technical and organizational measures that help protect information resources from unauthorized use (Nevdyaev, 2002). Although information technologies were introduced into the educational process of higher education almost immediately after they appeared, the pedagogical community still lacks clarity on a simple question: how can they be used not only as a subject of study but also as a learning tool (Smolyaninova et al., 2019).

Organization of computer-based testing requires a higher educational institution to have a developed infrastructure in the form of a working electronic educational environment, a sufficient number of student workstations (display rooms) equipped with computers, as well as the corresponding competence in the field of testing and use of information technology among teaching personnel (Smolyaninova et al., 2019). In the case of using interactive and online learning technologies, the corresponding tools are also required for the students themselves. Now, in most cases, these are mobile devices with the appropriate software.

We have to take into account the need for a higher educational institution to carry out additional administrative activities that are associated with the introduction of appropriate changes to the work programs of disciplines, class schedules and exams.

Finally, it is necessary to ensure testing validity. To do this, measures must be taken to protect the banks of test questions and to prevent cheating, the use of additional technical equipment, and the substitution of the test-taker.

Research Questions

The following issues were considered during the study:

Which composition of the electronic educational environment of a higher educational institution provides the ability to ensure the safety of computer-based testing?

How to evaluate the quality of a computer-based test as such?

What threats accompany computer-based testing?

What opportunities does the Moodle system provide for organizing computer-based testing?

How to organize a bank of questions of a testing system?

Purpose of the Study

The purpose of the study is to investigate whether the safety of computer-based testing can be ensured, so that the knowledge, the skills, and the abilities of students are assessed objectively, within the existing infrastructure of computing facilities of a higher educational institution.

Research Methods

Requirements for the Higher Educational Institution Infrastructure

In accordance with the requirements of existing education standards, a higher educational institution should have an electronic educational environment. Unfortunately, the legislator did not provide an exhaustive definition of this term. At the higher educational institution where we teach, it means a set of display rooms in all educational buildings, connected to the local intranet, with access to the Internet through gateways. On the territory of the institution, students use the Wi-Fi network. All software is licensed. The university has a learning management system such as Moodle that supports mobile devices, among other things. The general security policy is standard within the educational environment and is regulated by a set of password-protected user accounts with the appropriate access rights (administrator, faculty member, student, etc.). When conducting qualification activities, depending on the specific situation, access to the system may be limited to the intranet, a specific display room, or even a specific computer (technical protection measures). In addition, special procedures have been developed to prevent unauthorized student access to additional information (organizational measures).

To identify a specific user, the Moodle system additionally allows using their photo, which is critically important, for example, during student exams, but for unknown reasons, this opportunity has not been implemented at the higher educational institution.

The curriculum for applied and business informatics includes several disciplines related in one way or another to teaching algorithm design and programming. The graduates are required, in particular, to master high-level programming languages, while the entrance examinations that monitor their initial level of training do not verify the relevant knowledge, skills, and abilities. As a result, issues related to programming require special attention in the junior years. In the traditional version of the training, disciplines related to programming are supported by a lecture course, a laboratory course conducted in the display room, and practical exercises, and the results of mastering the necessary competencies are checked during the course work. With the introduction of interactive technologies, the electronic educational environment makes it possible, while maintaining the volume of the classroom load, to significantly improve programming training through the appropriate organization of student independent work (SIW). The use of a learning management system provides additional resources in the form of SIW monitoring. Thus, monitoring the progress of the laboratory course, the student's preparation for project work, and the writing of a term paper is implemented through sets of sequentially completed tasks in the Moodle system. The results of mastering the theoretical material are monitored using the built-in computer-based testing tools in Moodle. The posting of current grades and the generation of student ratings in the electronic educational environment can further stimulate students and prepare them for midterm assessment, which is also carried out in the form of a computer-based test. All this allows the teaching personnel and the management of the higher educational institution to have a real picture of the state of the educational process.
Therefore, the composition of the infrastructure necessary for the functioning of a computer-based testing system is known.

Test Quality Assessment

Computer-based testing makes it possible to check the knowledge of a large number of learners almost simultaneously. At the same time, faculty members save a significant amount of both student time and their own, which would otherwise be spent checking test papers. Testing makes it possible to measure the current level of student knowledge, make the necessary adjustments to the educational process, change the pace of training and, as a result, achieve better results. Unfortunately, a computer-based test today is not an ideal technological tool for measuring study results. To assess the quality of a test, characteristics such as reliability, validity, and discriminatory power are used (Muratova, 2016).

The reliability of a test task means an assessment of the accuracy of its measurement and the stability of its results against random factors (Shkerina et al., 2017). For the re-test method, which involves repeated testing using several parallel test forms, Chelyshkova (2002) gives an expression for calculating the reliability coefficient. A number of similar methods are described there as well, for example, the test splitting (split-half) method for a single test and the Kuder-Richardson method, along with an expression for determining the required length of the test.
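The cited expressions are not reproduced in the text, so the following is only a minimal sketch based on the standard textbook forms of these coefficients (the split-half estimate with the Spearman-Brown correction, Kuder-Richardson formula 20, and the Spearman-Brown formula for test length). It may differ in detail from the expressions in Chelyshkova (2002). The function names and the 0/1 response matrix layout are assumptions made for illustration.

```python
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def split_half_reliability(matrix):
    """Split-half reliability with the Spearman-Brown correction.

    matrix[i][j] is 1 if test-taker i answered task j correctly, else 0.
    Splitting into odd- and even-numbered tasks is an assumption.
    """
    first = [sum(row[0::2]) for row in matrix]
    second = [sum(row[1::2]) for row in matrix]
    r_half = pearson(first, second)
    return 2 * r_half / (1 + r_half)


def kr20(matrix):
    """Kuder-Richardson formula 20 for tasks scored as 0 or 1."""
    n_tasks = len(matrix[0])
    var_total = pvariance([sum(row) for row in matrix])
    pq = 0.0
    for j in range(n_tasks):
        p = mean([row[j] for row in matrix])  # share of correct answers
        pq += p * (1 - p)
    return n_tasks / (n_tasks - 1) * (1 - pq / var_total)


def length_factor(r_current, r_target):
    """How many times longer the test must be to reach r_target."""
    return r_target * (1 - r_current) / (r_current * (1 - r_target))
```

For example, `length_factor(0.6, 0.8)` is about 2.67, meaning that under these assumptions a test with reliability 0.6 would need to be roughly 2.7 times longer to reach 0.8.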

The validity of a test usually means a certain characteristic of what the test measures and how well it does it. The most common causes of monitoring invalidity are cheating, prompting, tutoring, leniency, excessive meticulousness, use of a certain method in the absence of proper conditions. In such cases, the results of the monitoring are inconsistent with the assigned tasks. In order to increase the validity of pedagogical control, expert assessments of control material are used to bring the requirements of the curriculum and the concept of knowledge to conformity. Moreover, there is no single characteristic of validity (Muratova, 2016). Further, we will use the following definition: validity is a test characteristic that reflects its ability to obtain results that are consistent with its goal and justifies the adequacy of decisions taken (Kim, 2007). As a numerical characteristic of validity, one can use the correlation coefficient of the test task results with the test score (Muratova, 2016).
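As an illustration of the numerical characteristic mentioned above, the correlation of a task's results with the test score can be computed as a point-biserial coefficient when the task is scored as 0 or 1. This is a sketch under that assumption; the function name is hypothetical, and the total score here includes the task itself.

```python
from statistics import mean, pstdev


def item_total_correlation(item, totals):
    """Point-biserial correlation between one task and the test score.

    item[i] is 1 or 0 for test-taker i; totals[i] is that person's score.
    """
    p = mean(item)  # share of test-takers who answered correctly
    spread = pstdev(totals)
    if p in (0, 1) or spread == 0:
        raise ValueError("correlation is undefined without variation")
    mean_correct = mean([t for t, x in zip(totals, item) if x == 1])
    mean_wrong = mean([t for t, x in zip(totals, item) if x == 0])
    return (mean_correct - mean_wrong) / spread * (p * (1 - p)) ** 0.5
```

A value close to 1 indicates that test-takers who solved the task also tend to score high on the whole test.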

Finally, the discriminatory power of a test means its ability to differentiate between stronger and weaker test participants. It can be estimated from the proportions of correct and incorrect answers (Ryabinova & Bulanova, 2016). It is noted that this is not the most important factor in evaluating a test task, but if both the validity coefficient of a particular task and its discriminatory coefficient fail to meet the specified criteria, then such a task needs to be removed from the test (Muratova, 2016).
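The text does not give a formula for the discriminatory coefficient. One common variant, used here purely as an assumption, compares the proportion of correct answers in the strongest and the weakest groups of test-takers. The 27% group share and both thresholds in `should_remove` are illustrative values, not criteria taken from the cited works.

```python
def discrimination_index(item, totals, share=0.27):
    """Difference in the proportion of correct answers between the
    top and bottom groups of test-takers ranked by total score."""
    n_group = max(1, round(len(totals) * share))
    order = sorted(range(len(totals)), key=lambda i: totals[i])
    low, high = order[:n_group], order[-n_group:]
    p_high = sum(item[i] for i in high) / n_group
    p_low = sum(item[i] for i in low) / n_group
    return p_high - p_low


def should_remove(validity, discrimination,
                  min_validity=0.3, min_discrimination=0.2):
    """Flag a task only if BOTH coefficients fall below their thresholds
    (the threshold values are hypothetical)."""
    return validity < min_validity and discrimination < min_discrimination
```

The removal rule mirrors the condition in the text: a task with low discrimination but acceptable validity is kept.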

We assume that the correct assessment of all three indicators of the testing quality will increase the objectivity of the test, quantitative indicators of which are not yet developed.

Test results using the Moodle system can be used as initial data to calculate the mentioned coefficients (Yubko & Efromeeva, 2019). The old version of the system provided a large set of statistical data for conducting relevant studies. Unfortunately, the transition to 3.x.x version was accompanied by a significant change in the technology for collecting statistics. Therefore, at the moment, these opportunities are limited. Nevertheless, it can be argued that methods for assessing the quality of testing are currently known and can be used.

Testing Security Threats

The main source of threats to computer-based testing as a means of objectively assessing study results is the information technologies themselves. Some of these threats are general in nature and practically independent of the direct organizers of the educational process. For example, the Internet has a large number of corruption-related sites that directly affect the level of education in the country. A Google search query "buy a university diploma" returns a huge number of links to sites offering such a service in a split second. The owners of such sites offer to provide a diploma from any university in the country within two or three days, proudly announcing “Do not doubt our company, as the sale of university diplomas is what we have been doing for a long time” (Sale Of Educational Documents On All Of The Territory Of The Russian Federation, n.d.). Similar services are provided by another group of sites, which is also easily found by a query such as “assistance to students of the St. Petersburg State University of Aerospace Instrumentation (SUAI)”. These sites touchingly advertise the SUAI, claiming that it is "the largest scientific and educational center for training, retraining and advanced training of programmers, engineers, economists, lawyers", and offer to complete any educational work, including “turnkey exams”, at a reasonable price. It is not known how many people have used the services of these fraudsters, but the harm they do is comparable to the harm done by drug dealers. The fight against these threats should be conducted systematically at the level of a higher educational institution or even the state.

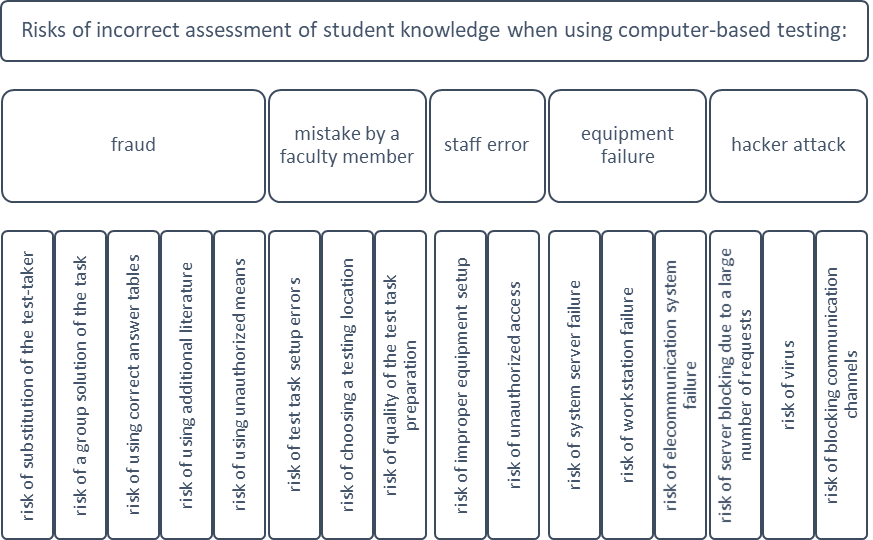

The work (Kononova et al., 2011) listed the main threats to the quality of computer-based testing at the level of a faculty member (see the figure above).

Over the nine years that have passed since the publication of the article (Kononova et al., 2011), we have encountered in practice many of the risks listed in the figure.

When working with extramural students, the risk of test-taker substitution turned out to be high. Given the smaller volume of classroom activities compared with the intramural form of study, it is not always possible to remember the faces of all the students in the group. Since the number of students in the lecture hall is fairly large, the examiner must check the photos in the student record books.

The risk of a group solution of the task comes down to attempts to prompt or to get a prompt. The main tool for combating this type of risk is disciplinary. The testing rules should include a clause allowing the examiner to give an unsatisfactory grade for conversations during the exam. In addition, the total time allowed for the test can be reduced so that a prompter is wary of being distracted from their own test.

The risk of using correct answer tables is the main threat in the fraud group. For example, on the site (All questions) there is an electronic version of the test tasks for the Programming techniques discipline prepared, probably, by one of the students of group 8026. Another example is a file (Freedocs, n.d.) with questions for another discipline. Having such a file on any electronic device and using the usual search system, you can quickly find the answer recorded in the file by the first words of the test task. It is suggested to use a typical construction of the question of the test task as a method of dealing with these tricks, for example, “There is the following fragment of the program:”. Then the results of the search for answers to a specific question will be very voluminous, which will require additional actions from the test-taker. The risk of using additional literature is associated with the possibility of access using the mobile Internet to unauthorized sources of information. As a result, it is necessary to prohibit the use of any gadgets during testing in the lecture hall.

There have been several cases of using radio devices to communicate with a consultant outside the lecture hall (risk of using unauthorized means). When proper order, and therefore silence, is maintained in the lecture room during the tests, such situations can be detected quickly.

A group of risks associated with errors by the faculty member and staff also influenced the quality of testing. As a rule, the examiner is responsible for organizing the exam and setting up the test. In the normal course of the exam, the examiner manages to control the testing process, keep the necessary documentation, and even answer some student questions. Problems begin in the event of an emergency, for example, if a student believes that a test task is incorrect. The faculty member is forced to be distracted by a conversation with the student, and during this time the rest of the test-takers have the opportunity to engage in fraud safely. There have been cases when such situations were deliberately planned and organized by the group, taking into account the availability of question and answer files.

As measures to combat these risks, a set of requirements for conducting computer-based testing in the lecture hall was formulated.

First, two examiners should carry out testing: a faculty member and their assistant. In this case, the risk of distracting examiners from direct monitoring of the exam is significantly reduced.

Second, in practice, most students store their identification data (username and password) on their smartphones, and at the beginning of the exam they have to use them to enter the system. Therefore, after the student enters the testing page, the use of any gadgets and the opening of any additional windows on the screens of the working displays must be prohibited. To this end, before starting the test, the student should see the following warnings:

The use of any technical devices, print media or notes during the exam is prohibited. If you want to write something down, ask for a piece of paper from the examiner, and you should have your pen or pencil.

Before starting to answer the test questions, turn off your mobile phone and put it in your bag. You are only allowed to get the phone out after you leave the lecture hall.

In the event of a controversial situation, the test-taker shall write their comments in the forum, which is available on the discipline page in Moodle, and notify the examiner about it. In that case, the grade is not entered in the grade sheet immediately after the test is completed. The student waits for the end of the exam, after which the situation is analyzed individually with the participation of a faculty member. Based on the results, a final decision is made on the grade.

The risks associated with hardware and software failure (hacker attacks) also manifested themselves. Truth be told, such situations were quite rare. Unfortunately, it is difficult to reduce the risk of their occurrence during the exam. If a display station fails, it is enough to transfer the student to another computer and run the test again. Failure of servers or telecommunication equipment means that the exam has to be canceled and rescheduled for another day.

Obviously, a set of the main risks that arise during computer-based testing is known. To combat them, there is a set of technical, organizational and administrative methods, and the latter should be documented in the form of regulatory documents.

Moodle Testing Features

From our point of view, the most important task of the faculty member during the period of studies is to ensure the uniformity of student work. To do this, a number of measures are taken that encourage the student to complete tasks on time and pass intermediate tests. Moodle tools make it possible to monitor whether the current positions (tasks) have been completed and to automate admission to the next ones. It is convenient when the tests correspond to the lecture material covered over a certain period of time. In this case, the student and the faculty member can evaluate the current study results and, if necessary, make corrections to the specific implementation of the educational process. Testing does not exclude other forms of knowledge control in laboratory, seminar and practical classes. It complements the existing control and measuring materials and becomes a component of the assessment tools fund.

Testing, with the appropriate organization, can be used as an alternative to traditional tests and exams. All these considerations compel us to approach the organization of the computer-based testing process from the standpoint of ensuring the safety of its use as an objective tool for assessing study results, which becomes especially relevant when shifting to the knowledge competence and knowledge intensity of production (Bodrunov, 2016). From our point of view, without a high-quality assessment of graduate knowledge, it is impossible to ensure the demand for specialists in the implementation, support and use of high-tech solutions based on information technologies in science, manufacturing, economic activity, trade and business in general.

By purpose of application, education tests are divided into norm-referenced and criterion-referenced (Kostylev & Kutepova, 2017). A norm-referenced test makes it possible to rank the test-takers by level of knowledge, i.e., to compare the test-takers' academic achievements with one another. A criterion-referenced test makes it possible to identify the degree to which the test-takers have assimilated a certain section of a given subject. The developer of the testing system is faced with the task of combining the two types of tests in a single assessment technology. It is necessary to take into account the fact that the set of test questions is usually of a criterial nature and is associated with the indicators of the competencies formed in the process of studying the discipline (should have the knowledge, should know how to, should possess, etc.). To control the current study results and the dynamics of their changes, and to assess the effectiveness and efficiency of the organization of the educational process, a sequence of tests performed throughout the entire period of studying the discipline is required.

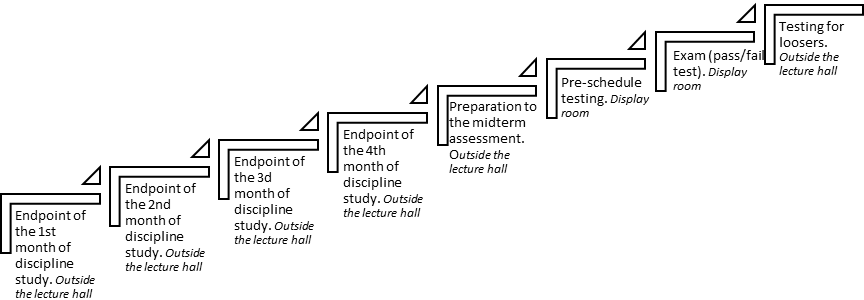

In our practice, over the course of a semester, we use monthly endpoint tests, tests for independent preparation for midterm assessment, pre-schedule testing, a scheduled computer-based test or exam, and the so-called "testing for losers", designed for independent exam preparation by a student who received an unsatisfactory grade during the midterm assessment (see the figure above).

The format of the tests for preparing for midterm assessment, pre-schedule testing and exam test (pass/fail test) is the same, taking into account the fact that in each session a set of questions in the exam paper is randomly selected. The first of these tests is intended to familiarize the student with the organization of the tests. The results of this test received by the student are not taken into account.

The exam is carried out strictly in accordance with the schedule of the exam period. A separate schedule is prepared for the pass/fail test and the pre-schedule test. The pre-schedule test and the exam (pass/fail test) differ only in the set of specific randomly selected test tasks. Their number on the exam paper ranges from 15 to 40. On average, one to three minutes are allocated for one test task, which determines the total testing time. The final score depends on the percentage of correct answers and is calculated on the following scale: 90%-100% is excellent, 80%-90% is good, 70%-80% is satisfactory, and less than 70% is unsatisfactory. The best of the test attempts is taken into account, and no more than one attempt is allowed per day. Testing results are displayed on a special board on the discipline page in the learning management system.
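The scoring rules above can be sketched as follows. Because the published ranges share their boundary values (for example, 90% appears in two ranges), the sketch assumes that a boundary value earns the higher grade; this is an assumption, not something stated in the text. The function names are hypothetical.

```python
def total_time_minutes(n_tasks, minutes_per_task):
    """Total testing time for an exam paper of 15 to 40 tasks."""
    if not 15 <= n_tasks <= 40:
        raise ValueError("the exam paper contains 15 to 40 tasks")
    if not 1 <= minutes_per_task <= 3:
        raise ValueError("one to three minutes are allocated per task")
    return n_tasks * minutes_per_task


def grade(percent_correct):
    """Map the percentage of correct answers to a grade.

    Assumption: a boundary value (90, 80, 70) earns the higher grade.
    """
    if percent_correct >= 90:
        return "excellent"
    if percent_correct >= 80:
        return "good"
    if percent_correct >= 70:
        return "satisfactory"
    return "unsatisfactory"


def final_grade(attempt_percentages):
    """Only the best attempt is taken into account."""
    return grade(max(attempt_percentages))
```

For instance, a student with attempts of 65%, 82% and 78% would receive "good", since only the 82% attempt counts.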

The testing for losers is intended for self-study of the discipline at home. It begins after the exams in all groups of the same year are completed, usually after the end of the exam period. The exam paper includes 60 or more test tasks, and the attempt time is limited to one and a half hours. The number of attempts is unlimited, but each subsequent attempt starts no earlier than 48 hours after the end of the previous one. In some cases, the self-study mode built into Moodle may be used. The testing for losers is considered passed if the student correctly answered 90% of the questions. In this case, the student has the opportunity to make the next attempt to pass the exam. All of the listed test sequences are maintained precisely by monitoring the task results by means of Moodle.
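The retake rules described above can be expressed as a small sketch. It assumes that "90% of the questions" means at least 90%, and the constant and function names are hypothetical; in practice these limits would be configured in the learning management system rather than coded by hand.

```python
from datetime import datetime, timedelta

MIN_GAP = timedelta(hours=48)      # pause between attempts
TIME_LIMIT = timedelta(minutes=90)  # duration of one attempt


def may_start_attempt(previous_end, now):
    """An attempt may start if there was no earlier attempt or if at
    least 48 hours have passed since the previous attempt ended."""
    return previous_end is None or now - previous_end >= MIN_GAP


def is_passed(correct, total):
    """Passed when at least 90% of the tasks are answered correctly
    (integer arithmetic avoids rounding issues at the boundary)."""
    return 10 * correct >= 9 * total
```

With a 60-task paper, `is_passed(54, 60)` holds while `is_passed(53, 60)` does not.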

As a result, it can be argued that there are all the technical and organizational capabilities to improve the reliability of computer-based testing.

Creating a Question Bank

Many researchers note the resistance of the faculty to the introduction of learning management systems and computer-based testing in the educational process. Several reasons are cited, including the low informational and communicative competence of faculty members, the difficulty of integrating the curriculum into the educational process in the Moodle format, and a lack of understanding of the specific didactic task that can be solved using the learning management system itself (Smolyaninova et al., 2019). Many of these arguments can be accepted. Indeed, nobody gets much pleasure from the introduction of a learning management system as such. It is additional work imposed on faculty members, which, at first glance, does not bring them much benefit. But if the issue is viewed as a procedure for automating control over the educational process, the introduction of computer-based testing ultimately frees the faculty members from part of the daily routine.

From our point of view, the bank of test questions should be divided into categories and subcategories in accordance with the sections and subsections of the studied discipline. The faculty member should make sure that each category contains at least a certain minimum number of test tasks. This makes it possible, on the one hand, to choose a test task at random and, on the other hand, to cover the entire structure of the study material. We determined an empirical boundary: any subcategory of the lowest level should contain at least five test tasks. From our point of view, meeting this requirement helps to ensure the validity of testing. In practice, the total number of test tasks in a standard discipline can reach a thousand.
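A minimal sketch of this selection scheme is given below. The bank is represented as a plain dictionary that maps a lowest-level subcategory name to a list of task identifiers; this layout and the function names are assumptions for illustration and do not reflect how Moodle stores its question bank.

```python
import random

MIN_TASKS_PER_SUBCATEGORY = 5  # empirical boundary stated in the text


def underfilled(bank):
    """Names of subcategories that hold fewer tasks than the minimum."""
    return sorted(name for name, tasks in bank.items()
                  if len(tasks) < MIN_TASKS_PER_SUBCATEGORY)


def assemble_paper(bank, per_subcategory=1, rng=random):
    """Draw tasks at random from every subcategory, so that the paper
    covers the whole structure of the study material."""
    short = underfilled(bank)
    if short:
        raise ValueError(f"too few tasks in: {', '.join(short)}")
    paper = []
    for name in sorted(bank):
        paper.extend(rng.sample(bank[name], per_subcategory))
    rng.shuffle(paper)  # do not reveal the section order to the test-taker
    return paper
```

Passing a seeded `random.Random` instance as `rng` makes a generated paper reproducible, which can be convenient when a disputed attempt has to be reviewed.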

Before the beginning of a semester, the bank of questions already exists in one form or another. This allows for the initial configuration of the test structure on the discipline page, as shown in the corresponding figure.

First, semantic errors in the wording of a test task are revealed. They are associated with the individual perception of the wording of the question (its meaning) by a specific person. This is the most difficult category of errors, and it can only be identified in practice. There are also logical, technical and grammatical errors. Naturally, all identified defects should be eliminated.

Second, in the process of giving lectures and answering the student questions, additional previously unaccounted facts and circumstances are revealed. New ideas for formulating the question appear, which are implemented in the form of new test tasks. After inclusion in the bank, such test tasks will appear on exam papers. At the same time, some of the tasks can be excluded from the bank of questions based on the results of the analysis.

Third, the technique related to the use of an essay type question has proven itself. During one of the preliminary tests, students are invited to come up with their own test task. If it turns out to be successful (and the likelihood of such an event is very high), an extra point in the evaluation of the test results can be added to the student as an encouragement for this work.

The test tasks themselves (questions in Moodle terminology) are either closed or open. In the first case, the task contains the main part and the answers formulated by the compiler. The instruction for the task indicates what exactly needs to be done, for example, select a button with the correct answer. This form is technologically convenient because it allows identifying specific knowledge, but it is the least protected from fraud attempts. In the second case (open form), the students themselves formulate a numerical, verbal or graphic answer. Both forms of test tasks are implemented in Moodle in various versions.

The classic test tasks of the closed type are well known. They take the form of a statement to which the test-taker responds with "true" or "false". Alternatively, the answer may be the number of the correct statement (or statements). Their merit lies in the fact that they provide an objective check of knowledge. Such tasks are easily compiled and programmed, for example, based on traditional exam questions. Unfortunately, preparing for such questions often boils down to memorizing the correct answer option, which can amount to fraud. From our point of view, the number of such tasks should be minimized.

The open form tasks are of the greatest interest from the point of view of ensuring the safety of testing. These include tasks with a short answer, a numerical answer, calculated and multiple calculated questions.

The short answer task involves entering text from the keyboard. Several variants of the correct answer (synonyms) can be provided. The entered text can be checked against a given combination of initial letters, which makes it possible to ignore declension and conjugation. The limiting form of this question is called an essay and involves entering fairly long texts that are later checked and graded manually by a faculty member.
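The idea of accepting several synonyms and matching only the initial letters can be sketched as follows. The sketch uses `*` as a wildcard for any run of characters, a convention similar to the one Moodle's short-answer questions use, but it is an independent illustration and not Moodle's own matching code. The function names and sample patterns are hypothetical.

```python
import re


def matches(pattern, response, case_sensitive=False):
    """Check a response against a pattern where '*' stands for any
    sequence of characters (including an empty one)."""
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    flags = 0 if case_sensitive else re.IGNORECASE
    return re.fullmatch(regex, response.strip(), flags) is not None


def check_short_answer(accepted_patterns, response):
    """The response is correct if it matches any accepted variant."""
    return any(matches(p, response) for p in accepted_patterns)
```

With the patterns `["recurs*", "self-call*"]`, the responses "Recursion" and "recursive" are both accepted, while "iteration" is rejected.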

The task with a numerical answer requires the student to carry out independent calculations, the correctness of which is then verified. The calculations are validated by programming a formula that computes the correct answer from the source data. The formula may include arithmetic operations, as well as some special functions from the PHP language library (Mathematical functions, n.d.). Several variants of the correct answer may be provided, and permissible calculation errors may be specified. As a result, it is possible to test the test-taker’s ability to perform mathematical calculations or, in the case of applied problems, to test the professional skills of calculating the necessary parameters (current, power dissipation, memory capacity, etc.).
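The checking logic for such a task can be illustrated with a short sketch. It is written in Python rather than in the formula syntax of the learning management system, and the power dissipation task, the function names, and the tolerance value are assumptions chosen for illustration.

```python
def power_dissipation(current_a, resistance_ohm):
    """Correct-answer formula for a sample applied task: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


def is_correct(response, expected, tolerance):
    """Accept the response if it lies within the permissible error."""
    return abs(response - expected) <= tolerance


# Source data of a hypothetical task: 0.5 A through a 200 ohm resistor.
expected = power_dissipation(0.5, 200)
```

Here `expected` equals 50.0 W, so with a permissible error of 0.5 W the response 50.4 is accepted and 51 is rejected.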

The calculated tasks are also focused on testing the skills of the test-taker and develop the idea of questions with a numerical answer. The difference is that a specific task is generated using a random number generator. As a result, the calculated question can exist in hundreds of different variants, and the correct answer formula takes into account the current values of the arguments received from the random number generator. Therefore, the Moodle system in its current version provides sufficient test programming capabilities to ensure reliable testing.
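The principle of a calculated task can be sketched in a few lines. The memory-capacity task, the value ranges, and the function name below are hypothetical; in a learning management system the same effect is achieved through its own question settings rather than hand-written code.

```python
import random


def make_variant(rng):
    """Generate one variant of a memory-capacity task together with
    the correct answer computed from the same random source data."""
    width = rng.choice([640, 800, 1024, 1280])
    height = rng.choice([480, 600, 768, 1024])
    bits_per_pixel = rng.choice([8, 16, 24, 32])
    text = (f"How many bytes are needed to store an uncompressed "
            f"{width}x{height} image at {bits_per_pixel} bits per pixel?")
    answer = width * height * bits_per_pixel // 8
    return text, answer
```

Even these three short value lists yield 64 distinct variants, so a neighbor's answer or a leaked answer table is of little use; wider value ranges would give the hundreds of variants mentioned above.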

Findings

The infrastructure that a higher educational institution needs for a computer-based testing system to function has been identified.

Methods for assessing the quality of testing exist and can be used.

A set of technical, organizational and administrative methods has been developed aimed at reducing risks during testing.

There are technical and organizational capabilities for implementing the computer-based testing procedure.

The Moodle Learning Management System in its current version provides ample opportunities for programming test tasks to ensure the reliability of testing.

Conclusion

Ensuring the safety of a computer-based testing system is a complex task that affects several areas of knowledge at once. Present-day requirements for the quality of training of future specialists make it necessary to take the reliability of graduate qualification assessment seriously. Obviously, the growing competence demands and knowledge intensity of production require, in turn, corresponding competence and knowledge intensity in education. The solution of these issues is moving into the practical domain and is impossible without the widespread use of information technology, both for education and for monitoring its results. In one form or another, the educational process will have to be formalized in the near future. One option is the transition to so-called case-study technologies as a method of practice-oriented learning (Gladkova et al., 2017). However, their use will be meaningless unless a sufficient level of testing safety is provided, including validity at all stages of testing. Note that if students were interested specifically in acquiring knowledge, and not just in "checking the box" for the subject under study, testing safety issues would be resolved faster and more easily. However, creating such conditions in higher education requires a completely different set of activities.

References

- Bodrunov, S. D. (2016). Gryadusheye. Novoye industrialnoye obshestvo: perezagruzka. Monografiya [The future. New industrial society: reboot. Monograph]. Institute of the New Industrial Development named after S. U. Vitte. [in Rus.]

- Chelyshkova, M. B. (2002). Teoriya i praktika konstruirovaniya pedagogicheskih testov: Uchebnoye posobiye [Theory and practice of constructing educational tests: Textbook]. Logos. [in Rus.]

- Freedocs (n.d.). https://freedocs.xyz/docx-437449951 [in Rus.]

- Gladkova, M. N., Kutepov, M. M., Luneva, U. B., Trutanova, A. V., & Yusupova, D. M. (2017). Tehnologiya keys-obucheniya v podgotovke bakalavrov [Case-study technology in the preparation of bachelors]. International Journal of Experimental Education, 6, 21-25. [in Rus.]

- Kim, V. S. (2007). Testirovaniye uchebnyh dostizheniy. Monografiya [Testing educational achievements. Monograph]. Ussuriysk State Pedagogical Institute. [in Rus.]

- Kononova, O. V., Moskaleva, O. I., & Stepanov, A. G. (2011). Riski, voznikayushiye pri provedenii testirovaniya sredstvami sistemy Moodle [Risks that arise during testing using the Moodle system]. Economics. Taxes. Law, 2, 202-208. [in Rus.]

- Kostylev, D. S., & Kutepova, L. I. (2017). Informatzionnyye tehnologii otzenivaniya kachestva uchebnyh dostizheniy obuchayushihsya [Information technology for assessing the quality of educational achievements of students]. Baltic Journal of Humanities, 63(20), 190-192. [in Rus.]

- Matematicheskiye funktzii [Mathematical functions] (n.d.). (PHP Group) http://php.net/manual/en/function.base-convert.php [in Rus.]

- Muratova, L. A. (2016). Validnost i diskiminativnost pri issledovanii i otzenke kachestva testa "Integralnoye vychisleniye" [Validity and discriminatory power in the study and assessment of the Integral Calculus test quality]. Scientific Almanac, 6-1(19), 323-326. [in Rus.]

- Nevdyaev, L. (2002). Telekomunnikatzionnyye tehnologii. Anglo-russkiy tolkovyi slovar-spravochnik [Telecommunication technologies. English-Russian explanatory dictionary]. Edited by U.M. Gornostayeva. Series of publications "Communication and Business". ICSTI - International Center for Scientific and Technical Information. LLC "Mobile Communications". [in Rus.]

- Prodazha Obrazovatelnyh Dokumentov Po Vsey Territorii Rf [Sale Of Educational Documents On All Of The Territory Of The Russian Federation] (n.d.). http://russia-diplomman.com/kupit-diplom-s-reestrom [in Rus.]

- Rubtsova, E. V. (2017). Puti sovershenstvovaniya testovogo kontrolya sformirovannosti kompetentziy studentov vuza [Ways to improve the test control of the formed competencies of university students]. Karelian Scientific Journal, 6, 4(21), 81-84. [in Rus.]

- Ryabinova, E. N., & Bulanova, I. N. (2016). Monitoring uspevaemosti studentov s pomoshiyu pedagogicheskih testov [Monitoring the student performance using educational tests]. Science Vector of Togliatti State University. Series: Pedagogy, Psychology, 4(27), 16-20. [in Rus.]

- Shkerina, L. V., Keyv, M. A., Zhuravleva, N. A., & Berseneva, O. V. (2017). Metodika diagnostiki universalnyh uchebnyh deystviy uchashihsya pri obuchenii matematike [Diagnostic technique of universal educational actions of students in teaching mathematics]. Bulletin of the Krasnoyarsk State Pedagogical University named after V. P. Astafiev, 3(41), 17-29. [in Rus.]

- Smolyaninova, O. G., Trofimova, V. V., Moroz, A. A., & Matusevich, N. P. (2019). Vozmozhnosti sovershenstvovaniya uchebnogo protzessa s ispolzovaniyem LMS Moodle [Opportunities for improving the educational process using the LMS Moodle]. The Modern Scientist, 5, 116-121. [in Rus.]

- Yubko, A. A., & Efromeeva, E. V. (2019). Monitoring onlayn-testa v sootvetstvii s trebovaniyami pedagogicheskoy teorii izmereniy [Monitoring an online test in accordance with the requirements of the pedagogical theory of measurements]. Scientific Almanac, 4-2(54), 88-92. [in Rus.]

Copyright information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

About this article

Publication Date

21 October 2020

Article Doi

10.15405/epsbs.2020.10.03.170

eBook ISBN

978-1-80296-089-1

Publisher

European Publisher

Volume

90

Edition Number

1st Edition

Pages

1-1677

Subjects

Economics, social trends, sustainability, modern society, behavioural sciences, education

Cite this article as:

Stepanov, A. G., Plotnikov, G. A., & Kosmachev, V. M. (2020). Assurance Of Safety Of Computer-Based Testing System For Midterm Knowledge Assessment. In I. V. Kovalev, A. A. Voroshilova, G. Herwig, U. Umbetov, A. S. Budagov, & Y. Y. Bocharova (Eds.), Economic and Social Trends for Sustainability of Modern Society (ICEST 2020), vol 90. European Proceedings of Social and Behavioural Sciences (pp. 1473-1485). European Publisher. https://doi.org/10.15405/epsbs.2020.10.03.170